Security of LLM Agents

Students

- Evan Rose, PhD student

- Georgios Syros, PhD student

- Tushin Mallick, PhD student

- Evan Li, undergraduate student

Project description

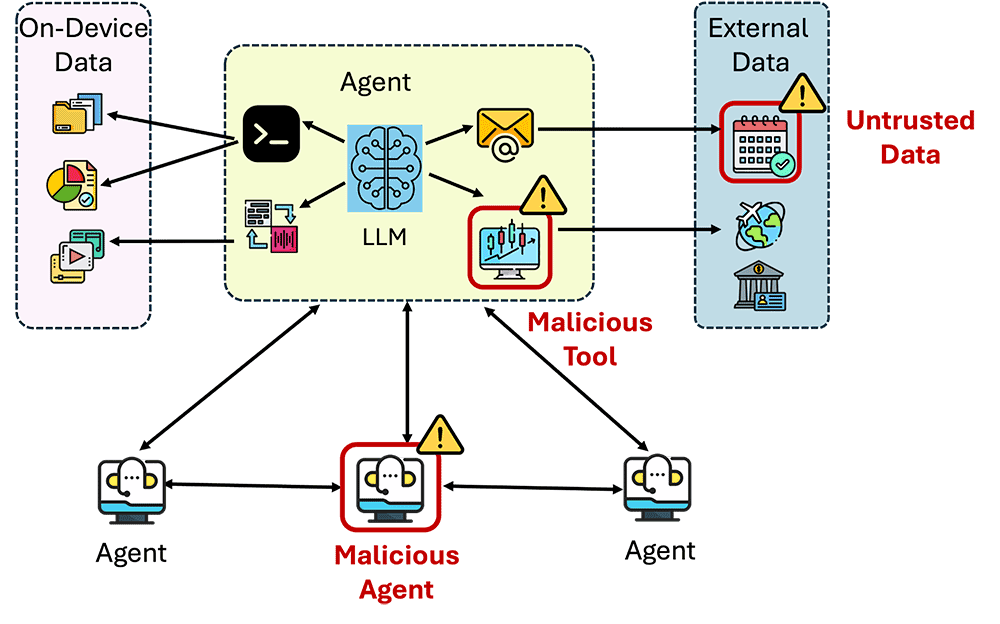

AI agents with increased levels of autonomy are being deployed in safety-critical applications such as healthcare, finance, and cybersecurity. These agents, built on top of large language models (LLMs), are becoming increasingly competitive at automating complex tasks performed by humans: they use tools installed on user devices, access external resources, and interact autonomously with other AI agents. Especially when running on user devices, they may gain access to sensitive personal information such as email, calendars, photos, and location. Unfortunately, this enhanced functionality also broadens the attack surface of agentic systems, exposing them to multiple security risks, including prompt injection, agent hijacking, malware propagation, and leakage of sensitive user data. Several challenges in securing AI agentic systems remain open, including providing secure mechanisms for agent discovery and communication, enabling agent oversight, and protecting against attacks such as prompt injection.

SAGA: A Security Architecture for Governing AI Agentic Systems. We propose SAGA, a framework for governing LLM agent deployment that is designed to enhance security while giving users oversight of their agents' lifecycle. SAGA employs a centralized Provider that maintains agents' contact information and user-defined access control policies, enabling users to register their agents and have their policies enforced on inter-agent communication. SAGA introduces a cryptographic mechanism for deriving access control tokens that offer fine-grained control over an agent's interactions with other agents and come with formal security guarantees. We evaluate SAGA on several agentic tasks, using agents in different geolocations and multiple on-device and cloud LLMs. SAGA scales well and can support millions of agents while adding minimal overhead throughout the entire agent lifecycle, from registration to deactivation.
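
To make the Provider and token ideas more concrete, here is a minimal Python sketch under stated assumptions. It is not SAGA's protocol: the allowlist policy, the `Provider` and `ReceivingAgent` classes, and the token fields (expiry, usage quota, nonce) are hypothetical, and an HMAC keyed by the receiving agent stands in for SAGA's cryptographic token derivation. It shows only the general shape: the Provider releases an agent's contact information only when that agent's owner-defined policy allows the requester, and the receiving agent then grants time- and usage-bounded access that the initiator cannot alter.

```python
import hashlib
import hmac
import secrets
import time
from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    owner: str
    endpoint: str  # contact information the Provider hands out
    # Hypothetical policy: IDs of agents allowed to initiate contact.
    allowed_peers: set[str] = field(default_factory=set)


class Provider:
    """Simplified stand-in for a centralized Provider: a registry plus a policy check."""

    def __init__(self):
        self._agents = {}

    def register(self, agent_id, owner, endpoint, allowed_peers=()):
        self._agents[agent_id] = AgentRecord(owner, endpoint, set(allowed_peers))

    def deactivate(self, agent_id):
        self._agents.pop(agent_id, None)

    def lookup(self, requester, target):
        """Return the target's endpoint only if both agents are registered
        and the target's policy allows the requester; otherwise None."""
        record = self._agents.get(target)
        if record is None or requester not in self._agents:
            return None
        if requester not in record.allowed_peers:
            return None
        return record.endpoint


class ReceivingAgent:
    """Issues and checks HMAC-based access tokens (a simplification,
    not SAGA's actual token construction)."""

    def __init__(self, agent_id):
        self.agent_id = agent_id
        self._key = secrets.token_bytes(32)  # secret known only to this agent
        self._uses = {}  # nonce -> number of times the token was accepted

    def _mac(self, initiator, expiry, max_uses, nonce):
        # Bind every token field (and this agent's ID) into the tag.
        msg = f"{initiator}|{self.agent_id}|{expiry}|{max_uses}|{nonce}".encode()
        return hmac.new(self._key, msg, hashlib.sha256).hexdigest()

    def issue_token(self, initiator, ttl_s, max_uses, now=None):
        now = time.time() if now is None else now
        expiry = int(now + ttl_s)
        nonce = secrets.token_hex(8)
        return {
            "initiator": initiator,
            "expiry": expiry,
            "max_uses": max_uses,
            "nonce": nonce,
            "tag": self._mac(initiator, expiry, max_uses, nonce),
        }

    def accept(self, token, initiator, now=None):
        """Accept only if the token is authentic, bound to this initiator,
        unexpired, and still under its usage quota."""
        now = time.time() if now is None else now
        expected = self._mac(token["initiator"], token["expiry"],
                             token["max_uses"], token["nonce"])
        if not hmac.compare_digest(expected, token["tag"]):
            return False  # forged or tampered token
        if token["initiator"] != initiator or now > token["expiry"]:
            return False  # wrong initiator or expired
        used = self._uses.get(token["nonce"], 0)
        if used >= token["max_uses"]:
            return False  # usage quota exhausted
        self._uses[token["nonce"]] = used + 1
        return True
```

For example, after a hypothetical `"bob-agent"` registers with `allowed_peers={"alice-agent"}`, `provider.lookup("alice-agent", "bob-agent")` returns Bob's endpoint while the reverse lookup returns `None`; a token issued with `max_uses=2` is accepted twice and then rejected, and editing any token field invalidates its tag. Binding the quota and expiry into the MAC keeps the receiving agent, rather than the initiator, in control of how long and how often it can be contacted.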

ACE: A Security Architecture for LLM-Integrated App Systems. LLM-integrated app systems extend the utility of LLMs with third-party apps, which a system LLM invokes through interleaved planning and execution phases to answer user queries. These systems introduce new attack vectors: malicious apps can violate the integrity of planning or execution, break availability, or compromise privacy during execution. We propose Abstract-Concrete-Execute (ACE), a new secure architecture for LLM-integrated app systems that provides security guarantees for both system planning and execution. Specifically, ACE decouples planning into two phases: it first creates an abstract execution plan using only trusted information, and then maps the abstract plan to a concrete plan using the installed system apps. We verify, via static analysis of the structured plan output, that the plans generated by our system satisfy user-specified secure information flow constraints. During execution, ACE enforces data and capability barriers between apps and ensures that execution follows the trusted abstract plan. Our architecture represents a significant step toward hardening LLM-based systems using system security principles.
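
The static check on structured plans can be illustrated with a minimal Python sketch. The plan format (`Step` with a named output and named inputs), the capability names, the labels in `SECRET_SOURCES` and `EXTERNAL_SINKS`, and the single rule "data derived from a secret source must not reach an external sink" are all hypothetical simplifications, not ACE's actual plan language or constraint syntax. Because the check inspects only the plan's structure, it can run before any app output is seen. The sketch also maps each abstract capability to an installed app and compares an observed execution trace against the abstract plan, loosely mirroring the concrete-mapping and execution phases.

```python
from dataclasses import dataclass

# Hypothetical labels: capabilities that produce secret data,
# and capabilities that send data outside the user's device.
SECRET_SOURCES = {"read_email", "read_calendar"}
EXTERNAL_SINKS = {"post_web"}


@dataclass(frozen=True)
class Step:
    name: str                     # name of the output this step produces
    capability: str               # abstract capability, e.g. "read_calendar"
    inputs: tuple[str, ...] = ()  # names of earlier steps' outputs it consumes


def check_flows(plan):
    """Propagate secrecy taint through the plan's dataflow and return the
    names of steps where secret-derived data reaches an external sink."""
    tainted = {}
    violations = []
    for step in plan:
        for dep in step.inputs:
            if dep not in tainted:  # inputs must come from earlier steps
                raise ValueError(f"{step.name} uses undefined input {dep!r}")
        secret = step.capability in SECRET_SOURCES or any(tainted[d] for d in step.inputs)
        if step.capability in EXTERNAL_SINKS and secret:
            violations.append(step.name)
        tainted[step.name] = secret
    return violations


def concretize(plan, installed_apps):
    """Map each abstract capability to an installed app declaring it
    (alphabetically first if several do)."""
    concrete = []
    for step in plan:
        providers = sorted(app for app, caps in installed_apps.items() if step.capability in caps)
        if not providers:
            raise LookupError(f"no installed app provides {step.capability!r}")
        concrete.append((step.name, step.capability, providers[0]))
    return concrete


def follows_plan(plan, executed_capabilities):
    """Execution check: the observed capability sequence must match the
    abstract plan exactly."""
    return [s.capability for s in plan] == list(executed_capabilities)
```

With these labels, a plan that reads the calendar, summarizes it, and posts the summary to the web is flagged at its posting step, while the same plan ending in a local `notify_user` step passes and is concretized to the (hypothetical) `CalendarApp`, `Summarizer`, and `Notifier` apps when they are installed.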

Relevant publications

- Georgios Syros, Anshuman Suri, Jacob Ginesin, Cristina Nita-Rotaru, and Alina Oprea. SAGA: A Security Architecture for Governing AI Agentic Systems. https://arxiv.org/pdf/2504.21034. NDSS 2026

- Evan Li, Tushin Mallick, Evan Rose, William Robertson, Alina Oprea, and Cristina Nita-Rotaru. ACE: A Security Architecture for LLM-Integrated App Systems. https://arxiv.org/pdf/2504.20984. NDSS 2026