Ryan Rad (He/Him)

Assistant Teaching Professor

Khoury College of Computer Sciences

Northeastern University

410 W Georgia, 15th Floor, Vancouver, BC

Land of: xʷməθkʷəy̓əm, Sḵwx̱wú7mesh, and səlilwətaɬ

About

Dr. Ryan Rad is an assistant teaching professor in the Khoury College of Computer Sciences at Northeastern University in Vancouver. In industry, he worked as a researcher, engineer, team lead, and product manager at several startups and companies, including Microsoft. Ryan has led data analytics and machine learning research projects that delivered responsible AI solutions to enterprises. These projects applied machine learning and computer vision to benefit communities in areas ranging from healthcare to sustainability.

He has served as a technical committee member or speaker for several top-tier computer science journals and conferences, including IEEE TIP, IEEE TMI, ICCV, ICIP, VCIP, AAAI, and MICCAI. Ryan primarily teaches Machine Learning, Algorithms, Software Engineering, and Data Analytics & Visualization. His current research applies computer vision and AI/ML to problems in environmental sustainability.

Teaching

Within each row, quarters are listed in reverse-chronological order, with the most recent quarter first.

| Course | Quarter(s) |
|---|---|
| CS 7980 – Capstone | Spring 2024 |
| CS 7675 – Master's Research | Fall 2023 |
| CS 6140 – Machine Learning | Summer 2023, Fall 2022 |
| CS 5800 – Algorithms | Spring 2024, Fall 2023 (3x), Fall 2022 (2x) |
| CS 5500 – Foundations of Software Engineering | Summer 2022 |

AIMES (AI for Monitoring Earth and Sustainability) Lab

News!

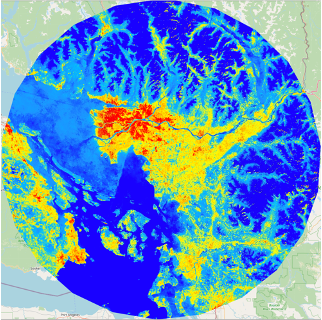

Improved Landcover Classification with Vision Transformer

This project uses Vision Transformers to address the need for precise landcover classification in climate change modeling. By capturing complex spatial-spectral relationships in multispectral satellite imagery, the approach improves classification accuracy despite limited training data. Through extensive experiments, the study shows that Vision Transformers can classify landcover efficiently, contributing to more accurate climate change models and environmental insights.

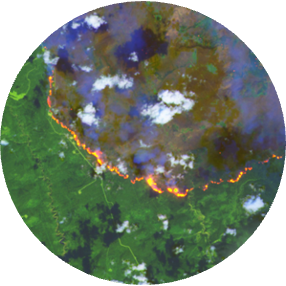

Remote Wildfire Detection with Satellite Imagery and Vision Transformers

This research aims to detect wildfires in remote areas of North America using satellite imagery and Vision Transformers. Using a large Landsat-8 dataset, the study evaluates how effectively these models support timely wildfire detection. The approach exploits the ability of Vision Transformers to capture spatial relationships in order to better discriminate between wildfire and non-wildfire regions. The results show promise for accurately identifying remote wildfires, which can support improved wildfire management strategies.

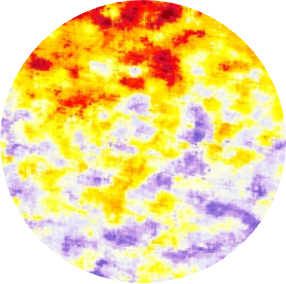

Carbon Mapping and Forest Sequestration Potential Prediction

Using satellite imagery and AI, this project focuses on accurately quantifying carbon emissions and identifying optimal areas for reforestation. By applying machine learning to decades of satellite data, the project aims to increase carbon sequestration capacity, restore ecosystems, and mitigate the long-term effects of deforestation.

Equitable Climate Action and Impact on Marginalized Communities

This initiative explores the differential impact of climate change on historically marginalized groups. Using satellite imagery and AI, the project aims to bridge knowledge gaps, quantify the effects of climate change, and empower underrepresented communities. Through proactive wildfire prediction models and restorative efforts such as forest carbon mapping, the project seeks to foster environmental resilience and inclusion.

Graduate Researchers

David (Zhiyuan) Yang

Global sustainability increasingly relies on solar energy. However, monitoring solar farms and accurately assessing progress in the transition to this renewable energy source remains challenging, especially at a global scale. This study introduces SSSwin, a novel vision transformer model developed to improve the mapping of solar panel farms from satellite imagery. The core of SSSwin is its Sequential Spectral Embedding module. This module is designed to handle the three-dimensional (spatial and spectral) structure of multispectral satellite images, enabling detailed capture of spatial-spectral information. To validate its effectiveness, we incorporated the new module into two state-of-the-art models, UPerNet-SwinB and Mask2Former-SwinB. The experimental results show that this integration improved the performance of both models without compromising efficiency.

Mia (Yanting) Zheng

Gross Primary Productivity (GPP) is a critical measure of carbon uptake by terrestrial vegetation and is essential for understanding the global carbon cycle and developing climate mitigation strategies. This study introduces a novel approach to estimating annual GPP in Europe and North America. The approach uses a deep tabular model that combines remote sensing data from Google Earth Engine with the FT-Transformer architecture. To address the scarcity of in-situ measurements, we used transfer learning: the model was pre-trained on extensive MOD17A2H/A3H (MOD17) data and then fine-tuned on FLUXNET station data. Our results show that the proposed model produces GPP estimates that align more closely with FLUXNET observations than the standard MOD17 values do. This improved accuracy highlights the model's ability to capture complex ecological and climatic interactions, offering a promising tool for advancing our understanding of terrestrial carbon dynamics.

Bronte Sihan Li

The recent surge in wildfire incidents, exacerbated by climate change, demands effective prediction and monitoring solutions. This study introduces a novel deep learning model, the Attention Swin U-net with Focal Modulation (ASUFM), designed to predict wildfire spread in North America using large-scale remote sensing data. The ASUFM model integrates spatial attention and focal modulation into the Swin U-net architecture, significantly improving predictive performance on the Next Day Wildfire Spread (NDWS) benchmark. Additionally, we developed an expanded dataset covering wildfires across North America from 2012 to 2023, providing a broader basis for model training and validation. Our approach achieves state-of-the-art performance in wildfire spread prediction, offering a promising tool for risk mitigation and resource allocation in wildfire management.