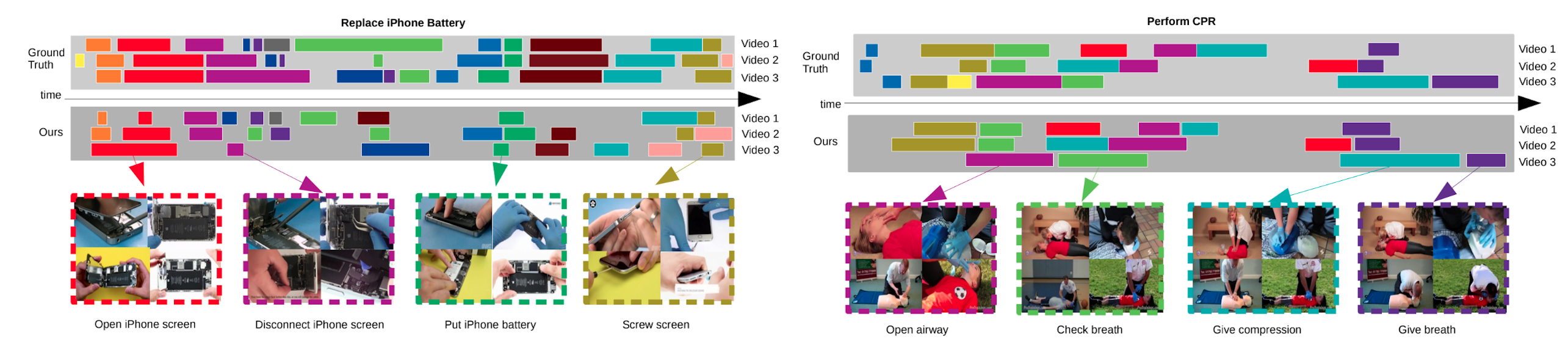

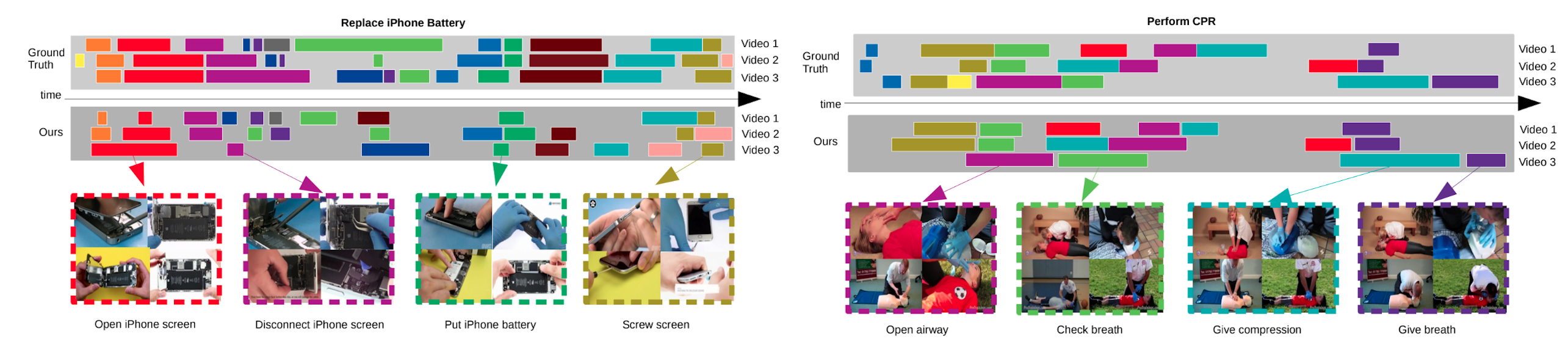

ProceL is a multi-modal procedural learning dataset, consisting of visual and language data, for research on instructional video understanding.

The dataset consists of 47.3 hours of video (720 videos) from 12 diverse tasks. These include detail-oriented tasks with visually similar key-steps, such as "tie a tie" and "assemble clarinet", as well as tasks that do not involve interacting with physical objects, where some steps involve interacting with a virtual environment, such as "set up Chromecast".

For every task, about 60 videos are collected from YouTube, and annotators build a grammar of the procedure's key-steps. The videos of each task are annotated with the beginning and ending time of each key-step in the grammar. In addition, the dataset includes spoken instructions, obtained from YouTube's Automatic Speech Recognition (ASR) and then manually cleaned.

In the dataset folder, the annotations of each task are stored in a separate folder. Each folder contains two files: `readme.txt` and `data.mat`.
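A minimal sketch of walking this layout in Python, using only the standard library. The function name `list_task_folders` is hypothetical, and the sketch assumes that every subfolder of the dataset folder is a task folder; it simply skips any folder that lacks either file.

```python
from pathlib import Path


def list_task_folders(dataset_root):
    """Return {task_folder_name: (readme_path, data_path)} for each task folder.

    Hypothetical helper: assumes each subfolder of dataset_root is one task and
    keeps only folders containing both readme.txt and data.mat.
    """
    root = Path(dataset_root)
    tasks = {}
    for folder in sorted(p for p in root.iterdir() if p.is_dir()):
        readme, data = folder / "readme.txt", folder / "data.mat"
        if readme.is_file() and data.is_file():
            tasks[folder.name] = (readme, data)
    return tasks
```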

The `readme.txt` file stores information about the videos of the task. Each line starts with the YouTube URL of a video, followed by two numbers: the beginning and ending times that we used to trim the video. Note that the annotations in `data.mat` are based on the trimmed videos.
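A minimal sketch of parsing `readme.txt` in Python. It assumes the three fields on each line are separated by whitespace or commas, and that line k describes video k in `data.mat`; neither detail is stated explicitly above, and the units of the trim times are not specified. Because the annotations refer to the trimmed videos, a time taken from `segment_time` or `key_steps_time` can be mapped back onto the original YouTube video by adding the trim start, assuming both use the same units. The names `VideoEntry`, `parse_readme`, and `to_original_time` are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class VideoEntry:
    url: str
    trim_start: float  # units are not specified in the dataset description
    trim_end: float


def parse_readme(text):
    """Parse readme.txt content into one VideoEntry per non-empty line."""
    entries = []
    for line in text.splitlines():
        # Assumption: fields are separated by whitespace and/or commas.
        fields = re.split(r"[\s,]+", line.strip())
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        entries.append(VideoEntry(fields[0], float(fields[1]), float(fields[2])))
    return entries


def to_original_time(entry, t_trimmed):
    """Map a time in the trimmed video to the original video (assumes matching units)."""
    return entry.trim_start + t_trimmed
```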

`data.mat` stores a MATLAB structure containing the annotations of the videos. The structure has the following format:

| Key | Value |
|---|---|
| `grammar` | All possible key-steps of the task's grammar (e.g., the grammar of the task "assemble clarinet" has 16 key-steps). Each video may contain a subset or all of these key-steps. |
| `segment_frame` | A cell array with 60 cells, one per video. For example, `segment_frame{1}` is a T×2 matrix giving the segmentation of video 1, where the first and second entries of each row are the start and end frames of a segment. |
| `segment_time` | Same as `segment_frame`, but in time instead of frames. |
| `key_steps_frame` | A cell array with 60 cells, one per video. For example, `key_steps_frame{1}` shows in which frames of video 1 each key-step of the grammar is observed. `key_steps_frame{1}{1}` is empty, which means that the first key-step of the grammar does not appear in video 1, while `key_steps_frame{1}{2}` returns `[5857 5934]`, showing that the second key-step appears in video 1 from frame 5857 to frame 5934. In some videos a key-step appears multiple times; in that case `key_steps_frame{i}{j}` (for video i and key-step j of the grammar) returns a matrix in which each row gives the start and end frames of one occurrence of key-step j in video i. |
| `key_steps_time` | Same as `key_steps_frame`, but in time instead of frames. |
| `key_steps_segment` | Same as `key_steps_frame`, but expressed in terms of the segments provided in the dataset instead of frames. |
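For readers working in Python, a minimal sketch of reading these annotations, assuming SciPy is installed and that `data.mat` is a pre-v7.3 MAT-file (the format `scipy.io.loadmat` can read). The description above does not name the MATLAB variable that holds the structure, so the hypothetical loader `load_procel_annotations` accepts either separate top-level variables or a single struct. The helpers `key_step_intervals` and `frame_labels` are also hypothetical; `frame_labels` additionally assumes 1-based, inclusive frame numbers (the MATLAB convention), which the dataset description does not state. Since MATLAB cell indices are 1-based, `key_steps_frame{1}{2}` corresponds to `ann["key_steps_frame"][0][1]` in Python.

```python
from numbers import Real


def load_procel_annotations(path):
    """Load one task's data.mat into a dict keyed by the field names in the table.

    Assumption: the file is a pre-v7.3 MAT-file; loadmat cannot read v7.3 (HDF5) files.
    """
    from scipy.io import loadmat  # imported lazily so the helpers below work without SciPy

    # squeeze_me drops singleton dimensions; struct_as_record=False turns a MATLAB
    # struct into an object with one attribute per field.
    raw = loadmat(path, squeeze_me=True, struct_as_record=False)
    variables = {k: v for k, v in raw.items() if not k.startswith("__")}
    if "grammar" in variables:
        return variables  # fields stored as separate top-level variables
    # Assumption: otherwise the file holds a single struct whose fields are the table keys.
    (struct,) = variables.values()
    return {k: v for k, v in vars(struct).items() if not k.startswith("_")}


def key_step_intervals(entry):
    """Normalize one key_steps_frame{i}{j} entry to a list of (start, end) pairs."""
    if entry is None:
        return []
    rows = list(entry)  # NumPy arrays and nested lists both iterate row by row
    if not rows:
        return []  # empty entry: key-step j does not appear in video i
    if isinstance(rows[0], Real):
        rows = [rows]  # a single occurrence stored as [start end]
    return [(row[0], row[1]) for row in rows]


def frame_labels(video_key_steps, num_frames, background=-1):
    """Per-frame key-step index for one video, `background` where no key-step occurs.

    video_key_steps is key_steps_frame{i} for a single video. Assumes 1-based,
    inclusive frame numbers; a later key-step overwrites an earlier one on overlap.
    """
    labels = [background] * num_frames
    for step, entry in enumerate(video_key_steps):
        for start, end in key_step_intervals(entry):
            for frame in range(int(start), int(end) + 1):
                if 1 <= frame <= num_frames:
                    labels[frame - 1] = step
    return labels
```

For example, `frame_labels(ann["key_steps_frame"][0], n)` would label each frame of video 1 with a 0-based grammar index, where `n` is the frame count of the trimmed video, which has to be obtained from the video itself since it is not among the fields listed above.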

@inproceedings{JointSeqFL:ICCV19,
author = {Elhamifar, Ehsan and Naing, Zwe},
title = {Unsupervised Procedure Learning via Joint Dynamic Summarization},
booktitle = {International Conference on Computer Vision (ICCV)},
year = {2019}
}